As a developer, the first step in solving any bug is to reproduce it. This is an important step before the investigation actually begins. Once the issue is reproduced, the developer starts investigating the bug with the knowledge, tools and debugging skills at hand. The source of the problem is then determined, a fix is proposed, and the fix is checked in after unit testing and whatever other processes the team follows. This is routine work for any seasoned software developer, and it may sound very simple, but unless the bug is trivial there are obstacles at every step.

One mistake developers often make is to jump into the debugging process sooner than they should. They fire up their favorite debugger, set the breakpoints and start debugging, when they should first have reduced the data set required to reproduce the bug.

The rule of debugging is very simple:

“Every developer should strive to reproduce the bug by hitting the required set of breakpoints the least number of times. This allows faster and more efficient debugging sessions.”

So if you are hitting a breakpoint a hundred times more often than you should, then either you are not using the debugger efficiently or you have not done enough work to reduce the data required to reproduce the bug.

The latter problem is more common as sometimes developers put in little effort to reduce the input data and start debugging prematurely. This leads to longer debugging cycles where a lot of time is wasted on investigating code that is not even relevant to the problem being solved.

For example, if there is a 1 MB text file that crashes your program when it is processed, then you should first try to minimize the text file until reducing it any further makes the crash go away. The text file obtained in this manner is the smallest input data set required to reproduce the crash. Once this goal is achieved, the program processes less data and executes less code, which results in the important breakpoints being hit fewer times.

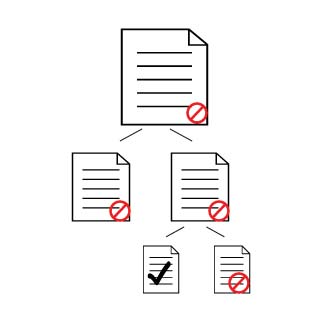

Well, how does the developer go about reducing the input data set? There is no single method, but one that commonly works is to run a binary search on the input data. For example, in the case above, the text file should be split in two, giving two files of about half a megabyte each, and each one should be tested to see whether the crash reproduces. If one of them still crashes, you have halved the data set. That file should then be split in two again, and the process repeated until you obtain a very small text file that still causes the crash. If neither half crashes on its own, the crash depends on content from both halves, and you will have to trim smaller pieces instead of whole halves. Depending on what your program does, you may even be able to reduce the 1 MB text file to a single-character file. Debugging your program with a single-character file is much simpler than using the initial 1 MB file.
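The halving procedure can be sketched in a few lines of Python. This is only a minimal illustration, not a complete reducer: `reduce_input` and the `crashes` callback are hypothetical names, and `crashes` stands in for whatever you do to run your program on a candidate input and report whether it still fails. The sketch stops as soon as neither half reproduces the crash on its own.

```python
from typing import Callable


def reduce_input(data: bytes, crashes: Callable[[bytes], bool]) -> bytes:
    """Repeatedly halve `data`, keeping whichever half still triggers the crash.

    `crashes` is a caller-supplied (hypothetical) predicate that runs the
    program under test on the given input and returns True if it still fails.
    """
    if not crashes(data):
        raise ValueError("the original input does not reproduce the crash")

    while len(data) > 1:
        mid = len(data) // 2
        first, second = data[:mid], data[mid:]
        if crashes(first):
            data = first
        elif crashes(second):
            data = second
        else:
            # Neither half crashes alone, so the crash needs content from
            # both halves. Plain halving cannot shrink the input further.
            break
    return data


# Stand-in for a real program: pretend it crashes on any NUL byte.
def has_nul(candidate: bytes) -> bool:
    return b"\x00" in candidate


sample = b"a" * 1000 + b"\x00" + b"b" * 500
print(reduce_input(sample, has_nul))  # b'\x00'
```

In practice `crashes` would write the candidate to a temporary file and launch the real program on it. When the loop stops early, the result is smaller than the original but not necessarily minimal, and further trimming has to be done by removing smaller chunks.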

The Caveat

Sometimes a large data set may have multiple issues. By reducing it as described above, you may solve part of the problem while other problems go unnoticed because they are absent from the reduced set. Therefore, once a bug has been fixed using a reduced input data set, the fix should also be tested against the input provided with the original bug. This ensures that no other issue that should have been fixed was ignored in a bid to make debugging more efficient.
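As a rough illustration of this caveat, the sketch below checks a fix against both the reduced input and the original one. Everything in it is hypothetical: `verify_fix` is an illustrative helper, and `patched_crashes` simulates a program in which one bug (a NUL byte) has been fixed while a second, unrelated bug (a 0xFF byte) is still present.

```python
from typing import Callable, Dict


def verify_fix(crashes: Callable[[bytes], bool],
               inputs: Dict[str, bytes]) -> Dict[str, bool]:
    """Map each named input to True if the patched program no longer crashes on it."""
    return {name: not crashes(data) for name, data in inputs.items()}


# Hypothetical patched program: the NUL-byte bug is fixed,
# but a second bug triggered by a 0xFF byte remains.
def patched_crashes(data: bytes) -> bool:
    return b"\xff" in data


results = verify_fix(patched_crashes, {
    "reduced": b"\x00",                 # minimal input used while debugging
    "original": b"abc\x00def\xffghi",   # input attached to the original bug
})
print(results)
```

Here the reduced input passes while the original still fails, which is exactly the situation the re-test is meant to catch.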

Final Note

Reducing the input data is essential before starting the debugging process and a great productivity aid too. If possible, this should be a part of the bug reporting process for quality engineers or customers who often log the issue. Reducing the data may not always be possible but it is certainly worth an attempt.

I noticed your comment on my blog article about debugging. Did you ever read that debugging handbook? (I can't remember the name exactly.) More real-world war stories on debugging would be good whenever those are possible.

Thanks Tim. I haven’t had time to grab the book yet. Will try sharing more debugging experiences in future posts.

thx